Docker Swarm Networking

Docker Swarm creates two networks: `docker_gwbridge` and `ingress`.

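`docker_gwbridge` is a bridge network that handles communication between containers and their host.

`ingress` is an overlay network, that is, a virtual layer-2 network laid over the hosts. It is used to expose services to external clients, and it is how Docker Swarm implements the routing mesh (routing external requests to containers on different hosts).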

How Containers in Docker Swarm Communicate with Containers on Other Hosts

Docker Swarm creates an `ingress` network:

```shell
[root@manager ~]# docker network ls
NETWORK ID     NAME              DRIVER    SCOPE
2d7d0c914941   bridge            bridge    local
d90826abad05   docker_gwbridge   bridge    local
5987468e9e42   host              host      local
q79wmy2m5tc5   ingress           overlay   swarm   # created automatically; on my hosts the default ingress subnet collided with the host network, so I replaced this network
e71fb6d1afcb   none              null      local
```

Inspect the details of the `ingress` network:

```shell
[root@manager ~]# docker inspect ingress
.....
        "IPAM": {
            "Driver": "default",
            "Options": null,
            "Config": [
                {
                    "Subnet": "10.11.0.0/16",
                    "Gateway": "10.11.0.2"
                }
            ]
        },
.....
```

The subnet of `ingress` is 10.11.0.0/16.

Create a service

If no port is published, the containers are attached only to the `docker0` bridge. Only when a container port is published do they join `ingress` and `docker_gwbridge`.

```shell
docker service create --replicas 3 -p8080:80 --name test_1 busybox:sleep
```

Check the IP addresses of each container:

Manager

Bridge: 172.18.0.3/16

overlay: 10.11.0.14/16

```shell
[root@manager ~]# docker exec test_1.3.ptazpkyc9ksu27o0ra47k2sxd ip a
...
26: eth0@if27: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1150 qdisc noqueue
    link/ether 02:42:0a:0b:00:0e brd ff:ff:ff:ff:ff:ff
    inet 10.11.0.14/16 brd 10.11.255.255 scope global eth0
       valid_lft forever preferred_lft forever
28: eth1@if29: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff
    inet 172.18.0.3/16 brd 172.18.255.255 scope global eth1
       valid_lft forever preferred_lft forever
```

Node01

Bridge: 172.18.0.3/16

overlay: 10.11.0.12/16

```shell
[root@node01 ~]# docker exec test_1.1.rpi44vea4kvwgcsfipdlj4m5o ip a
.....
22: eth0@if23: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1150 qdisc noqueue
    link/ether 02:42:0a:0b:00:0c brd ff:ff:ff:ff:ff:ff
    inet 10.11.0.12/16 brd 10.11.255.255 scope global eth0
       valid_lft forever preferred_lft forever
24: eth1@if25: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff
    inet 172.18.0.3/16 brd 172.18.255.255 scope global eth1
       valid_lft forever preferred_lft forever
```

Node02

Bridge: 172.18.0.3/16

overlay: 10.11.0.13/16

```shell
[root@node02 ~]# docker exec test_1.2.yz0fujii835wtqmlpposu1aqh ip a
...
22: eth0@if23: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1150 qdisc noqueue
    link/ether 02:42:0a:0b:00:0d brd ff:ff:ff:ff:ff:ff
    inet 10.11.0.13/16 brd 10.11.255.255 scope global eth0
       valid_lft forever preferred_lft forever
24: eth1@if25: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether 02:42:ac:12:00:03 brd ff:ff:ff:ff:ff:ff
    inet 172.18.0.3/16 brd 172.18.255.255 scope global eth1
       valid_lft forever preferred_lft forever
```

The bridge addresses of the three containers are identical, because each `docker_gwbridge` is local to its own host, while their overlay addresses differ. Containers on different hosts communicate over the overlay network:

```shell
[root@manager ~]# docker exec test_1.3.ptazpkyc9ksu27o0ra47k2sxd ping -c4 10.11.0.13
PING 10.11.0.13 (10.11.0.13): 56 data bytes
64 bytes from 10.11.0.13: seq=0 ttl=64 time=0.361 ms
64 bytes from 10.11.0.13: seq=1 ttl=64 time=2.727 ms
64 bytes from 10.11.0.13: seq=2 ttl=64 time=0.701 ms
64 bytes from 10.11.0.13: seq=3 ttl=64 time=0.608 ms

--- 10.11.0.13 ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 0.361/1.099/2.727 ms
```
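A small aid for reading the `ip a` outputs above: in every interface shown, the last four bytes of the MAC address spell out the interface's IPv4 address in hexadecimal (for example `0a:0b:00:0e` is 10.11.0.14). The sketch below decodes that. The function name `mac_to_ip` is made up for illustration, and the rule is only an observation drawn from these outputs, not something guaranteed for MAC addresses that were set by hand.

```shell
# Decode the last four bytes of a MAC address into a dotted IPv4 address.
# Assumes the 02:42:xx:xx:xx:xx layout seen in the outputs above.
mac_to_ip() {
    old_ifs=$IFS
    IFS=:
    set -- $1            # split the MAC into six hex fields
    IFS=$old_ifs
    # Fields 1 and 2 are the fixed 02:42 prefix; fields 3..6 carry the address.
    printf '%d.%d.%d.%d\n' "0x$3" "0x$4" "0x$5" "0x$6"
}

mac_to_ip 02:42:0a:0b:00:0e   # eth0 of the container on the manager
mac_to_ip 02:42:ac:12:00:03   # eth1, identical on all three hosts
```

The second call gives the same result on every host, which matches the point that the bridge addresses are only meaningful locally.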

The bridge network is used to communicate with the host, and it is also the path to the external network. The container's default route points at it:

```shell
[root@manager ~]# docker exec test_1.3.x0i66md3ks9u0t1idhn9u00f4 route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         172.18.0.1      0.0.0.0         UG    0      0        0 eth1
10.11.0.0       0.0.0.0         255.255.0.0     U     0      0        0 eth0
172.18.0.0      0.0.0.0         255.255.0.0     U     0      0        0 eth1
```

First, ping an external host:

```shell
[root@manager ~]# docker exec test_1.3.x0i66md3ks9u0t1idhn9u00f4 ping -c 4 www.baidu.com
PING www.baidu.com (112.80.248.75): 56 data bytes
64 bytes from 112.80.248.75: seq=0 ttl=127 time=16.433 ms
64 bytes from 112.80.248.75: seq=1 ttl=127 time=16.223 ms
64 bytes from 112.80.248.75: seq=2 ttl=127 time=17.008 ms
64 bytes from 112.80.248.75: seq=3 ttl=127 time=16.623 ms

--- www.baidu.com ping statistics ---
4 packets transmitted, 4 packets received, 0% packet loss
round-trip min/avg/max = 16.223/16.571/17.008 ms
```

Then disconnect the container from `docker_gwbridge`. The daemon reports an error:

```shell
[root@manager ~]# docker network disconnect docker_gwbridge test_1.3.ptazpkyc9ksu27o0ra47k2sxd
Error response from daemon: container 8efd61d20463d1f0bfb526542d365e6fb42e2636093d75df6ee09170e905d4d1 failed to leave network docker_gwbridge: container 8efd61d20463d1f0bfb526542d365e6fb42e2636093d75df6ee09170e905d4d1: endpoint create on GW Network failed: endpoint with name gateway_fd1e316fc655 already exists in network docker_gwbridge
```

Ping the external host again:

```shell
[root@manager ~]# docker exec test_1.3.x0i66md3ks9u0t1idhn9u00f4 ping -c 4 www.baidu.com
^C
```

The container can no longer reach the external network.
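To make the routing decision concrete, here is a minimal sketch of a longest-prefix-match lookup over the three routes shown in the container's `route -n` output. The helper names `ip_to_int` and `lookup_iface` are made up for this example, and the lookup is a simplification of what the kernel does. It shows why overlay traffic leaves through `eth0` while everything else, including external traffic, leaves through `eth1` on the bridge network.

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Routes copied from the container's routing table:
# destination, netmask, gateway, interface.
ROUTES="0.0.0.0 0.0.0.0 172.18.0.1 eth1
10.11.0.0 255.255.0.0 0.0.0.0 eth0
172.18.0.0 255.255.0.0 0.0.0.0 eth1"

# Print the outgoing interface for a destination address.
# Among all matching routes, the one with the largest netmask wins.
lookup_iface() {
    dst=$(ip_to_int "$1")
    best_mask=-1
    best_iface=none
    while read -r net mask gw iface; do
        n=$(ip_to_int "$net")
        m=$(ip_to_int "$mask")
        if [ $(( dst & m )) -eq "$n" ] && [ "$m" -gt "$best_mask" ]; then
            best_mask=$m
            best_iface=$iface
        fi
    done <<EOF
$ROUTES
EOF
    echo "$best_iface"
}

lookup_iface 10.11.0.13      # another container on the overlay network
lookup_iface 112.80.248.75   # the external address that was pinged
```

Only the default route matches the external address, so that traffic depends entirely on `eth1`, which is consistent with the ping failing once the bridge attachment is disturbed.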

Docker Swarm keeps a network namespace that contains a bridge for this subnet, and every container attached to the overlay network is connected to that bridge.

List Docker's network namespaces:

```shell
[root@manager ~]# ll /run/docker/netns/
total 0
-r--r--r-- 1 root root 0 May 17 16:25 1-q79wmy2m5t   # this namespace holds br0, the bridge of the ingress network
-r--r--r-- 1 root root 0 May 17 19:10 7035cc6a4c3a
-r--r--r-- 1 root root 0 May 17 16:25 ingress_sbox   # covered below
```

Enter the network namespace to see the bridge's IP:

```shell
[root@manager ~]# nsenter --net=/run/docker/netns/1-q79wmy2m5t ip a
.........
2: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1150 qdisc noqueue state UP group default
    link/ether 4a:12:15:4c:27:e9 brd ff:ff:ff:ff:ff:ff
    inet 10.11.0.2/16 brd 10.11.255.255 scope global br0
       valid_lft forever preferred_lft forever
11: vxlan0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1150 qdisc noqueue master br0 state UNKNOWN group default
    link/ether 4a:12:15:4c:27:e9 brd ff:ff:ff:ff:ff:ff link-netnsid 0
13: veth0@if12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1150 qdisc noqueue master br0 state UP group default
    link/ether a6:0f:6e:b1:3d:c9 brd ff:ff:ff:ff:ff:ff link-netnsid 1
37: veth5@if36: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1150 qdisc noqueue master br0 state UP group default
    link/ether ea:5f:00:41:94:fc brd ff:ff:ff:ff:ff:ff link-netnsid 2
```

Below the bridge there are also two veth devices. The number in front of the device name is the interface index inside this namespace, and the number after `@if` is the index of its peer. Take this line: `37: veth5@if36: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1150 qdisc noqueue master br0 state UP group default`. The device has index 37 in this namespace, so its peer should have index 36, and the peer normally lives in a container. `master br0` shows that device 37 is attached to `br0`. To verify this:

```shell
[root@manager ~]# docker exec test_1.3.nrm0chgn4m9f5xeh4665d1854 ip a
.....
36: eth0@if37: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1150 qdisc noqueue
    link/ether 02:42:0a:0b:00:10 brd ff:ff:ff:ff:ff:ff
    inet 10.11.0.16/16 brd 10.11.255.255 scope global eth0
       valid_lft forever preferred_lft forever
......
```

That is exactly what we see. The container sends its traffic to the bridge of the `ingress` network, which holds the gateway address, and from there the traffic reaches the other nodes. The overlay network on the other nodes works the same way. In fact every veth pair works like this: one end sits inside the container as its network interface, and the other end is attached to the bridge.

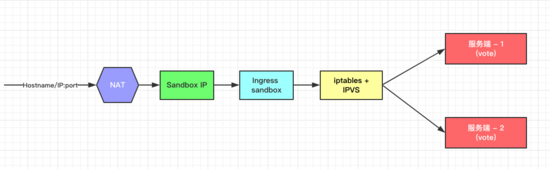
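Matching veth ends by eye gets tedious once there are many containers. As a rough sketch, the filter below pulls the interface index, the name and the peer index out of `ip a` style header lines. The function name `veth_pairs` is hypothetical, and the pattern only assumes the `INDEX: NAME@ifPEER:` layout seen in the outputs of this article.

```shell
# Read `ip a` output on stdin and print "<index> <name> <peer index>"
# for every interface whose header has the form "37: veth5@if36: ...".
# Interfaces without a peer, such as br0, are skipped.
veth_pairs() {
    sed -n 's/^\([0-9][0-9]*\): \([^@:]*\)@if\([0-9][0-9]*\):.*/\1 \2 \3/p'
}

# Sample header lines taken from the namespace and container outputs.
veth_pairs <<'EOF'
2: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1150 qdisc noqueue state UP group default
37: veth5@if36: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1150 qdisc noqueue master br0 state UP group default
36: eth0@if37: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1150 qdisc noqueue
EOF
```

Two lines belong to the same pair when the index of one equals the peer index of the other, as with 37 and 36 here. On a real host the same filter can be fed from `nsenter --net=/run/docker/netns/1-q79wmy2m5t ip a`.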

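How Docker Swarm Performs Load Balancing

First create a service made up of three nginx containers, publishing port 8080 on the host and mapping it to port 80 in the containers: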

```shell
docker service create --replicas 3 --name test_1 -p 8080:80 nginx
```

Once the service has been created, the host has an iptables rule like this:

```shell
-A DOCKER-INGRESS -p tcp -m tcp --dport 8080 -j DNAT --to-destination 172.18.0.2:8080
```

It sends packets whose destination port is 8080 on this host to 172.18.0.2:8080.

Now look at the routing table. Packets destined for the 172.18.0.0/16 network are sent out through `docker_gwbridge`:

```shell
[root@manager ~]# route -n
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
.......
172.18.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker_gwbridge
```

172.18.0.2 lives in a network namespace called `ingress_sbox`. Looking at its addresses, the interface that holds 172.18.0.2 has index 14 and its peer has index 15:

```shell
[root@manager ~]# nsenter --net=/run/docker/netns/ingress_sbox ip a
.......
12: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1150 qdisc noqueue state UP group default
    link/ether 02:42:0a:0b:00:04 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.11.0.4/16 brd 10.11.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 10.11.0.20/32 brd 10.11.0.20 scope global eth0
       valid_lft forever preferred_lft forever
14: eth1@if15: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:12:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet 172.18.0.2/16 brd 172.18.255.255 scope global eth1
       valid_lft forever preferred_lft forever
```

A packet travels from `docker_gwbridge` into `ingress_sbox` over a veth pair, just as described earlier. The peer of the 172.18.0.2 interface has index 15, and device 15 is in the host's namespace:

```shell
[root@manager ~]# ip a|grep 15.*14
15: veth8a6ad36@if14: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker_gwbridge state UP group default
```

This device is attached to `docker_gwbridge`.

Once the packet reaches `ingress_sbox`, iptables processes it further:

```shell
[root@manager ~]# nsenter --net=/run/docker/netns/ingress_sbox iptables-save -t mangle
# Generated by iptables-save v1.4.21 on Mon May 17 20:44:00 2021
*mangle
.......
-A PREROUTING -p tcp -m tcp --dport 8080 -j MARK --set-xmark 0x102/0xffffffff
-A INPUT -d 10.11.0.11/32 -j MARK --set-xmark 0x102/0xffffffff
COMMIT
```

A packet arriving in `ingress_sbox` has destination IP 172.18.0.2 and destination port 8080, so it matches the first rule and iptables marks it with 0x102, which is 258 in decimal:

```shell
[root@manager ~]# nsenter --net=/run/docker/netns/ingress_sbox ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
FWM  258 rr
  -> 10.11.0.12:0                 Masq    1      0          0
  -> 10.11.0.13:0                 Masq    1      0          0
  -> 10.11.0.16:0                 Masq    1      0          0
```

IPVS forwards packets marked 258 to the three IPs listed under `FWM 258`, using the round-robin (`rr`) scheduler.

```shell
[root@manager ~]# nsenter --net=/run/docker/netns/ingress_sbox iptables-save -t nat
*nat
.....
-A POSTROUTING -d 10.11.0.0/16 -m ipvs --ipvs -j SNAT --to-source 10.11.0.4
COMMIT
```

This is a rule in the nat table. It rewrites the source address of packets destined for the 10.11.0.0/16 network to 10.11.0.4. This IP is different on every Docker Swarm node, so it can be used to tell which host forwarded each request.
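The two numeric steps above can be checked with a few lines of shell. This is only an illustrative sketch: `mark_to_dec` and `rr_pick` are hypothetical helper names, the backend list is copied from the `ipvsadm` output, and the real IPVS scheduler keeps its own position in the list, so the starting backend on a live system may differ.

```shell
# Convert an iptables mark written in hex (as in --set-xmark 0x102)
# to the decimal form that ipvsadm prints after FWM.
mark_to_dec() {
    printf '%d\n' "$1"
}

# Backends listed under "FWM 258 rr" in the ipvsadm output.
BACKENDS="10.11.0.12 10.11.0.13 10.11.0.16"

# Pick the backend for the n-th connection (n starts at 0) by cycling
# through the list in order, which is the idea behind round robin.
rr_pick() {
    n=$1
    set -- $BACKENDS          # positional parameters now hold the backends
    shift $(( n % $# ))       # skip n mod (number of backends) entries
    echo "$1"
}

mark_to_dec 0x102
for i in 0 1 2 3; do
    rr_pick "$i"
done
```

The fourth connection wraps around to the first backend again, which is why repeated requests to port 8080 end up spread over all three containers.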

Each host has its own IP in `ingress_sbox`, used to reach the backend services, plus a VIP whose purpose I have not figured out.

Manager

```shell
[root@manager ~]# nsenter --net=/run/docker/netns/ingress_sbox ip a
.......
12: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1150 qdisc noqueue state UP group default
    link/ether 02:42:0a:0b:00:04 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.11.0.4/16 brd 10.11.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 10.11.0.20/32 brd 10.11.0.20 scope global eth0
       valid_lft forever preferred_lft forever
```

Node1

```shell
12: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1150 qdisc noqueue state UP group default
    link/ether 02:42:0a:0b:00:09 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.11.0.9/16 brd 10.11.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 10.11.0.20/32 brd 10.11.0.20 scope global eth0
       valid_lft forever preferred_lft forever
```

Node2

```shell
12: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1150 qdisc noqueue state UP group default
    link/ether 02:42:0a:0b:00:0a brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 10.11.0.10/16 brd 10.11.255.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet 10.11.0.20/32 brd 10.11.0.20 scope global eth0
       valid_lft forever preferred_lft forever
```

Up to this point the destination port of the packet is still 8080. It has not become 80 yet. For that reason the network namespace of every container has an iptables rule like this in the PREROUTING chain:

```shell
-A PREROUTING -d 10.11.0.21/32 -p tcp -m tcp --dport 8080 -j REDIRECT --to-ports 80
```

When the destination address is the container's own service IP, the destination port is rewritten to 80. That completes the load balancing.
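To tie the steps together, here is a simplified model of the rewrites a request goes through, written as plain shell with no Docker involved. The function `trace_request` is hypothetical, the client and host addresses in the example call come from documentation address ranges, and the backend is passed in by hand rather than chosen by IPVS. The fixed addresses 172.18.0.2 and 10.11.0.4 and the ports 8080 and 80 are the ones from the manager's outputs in this article.

```shell
# Print how source, destination and port change at each hop.
# Arguments: client IP, host IP, published port, chosen backend IP.
trace_request() {
    src=$1
    dst=$2
    dport=$3
    backend=$4

    echo "client:       $src -> $dst:$dport"

    # Host, DOCKER-INGRESS chain: DNAT the published port to ingress_sbox.
    if [ "$dport" = 8080 ]; then
        dst=172.18.0.2
    fi
    echo "host DNAT:    $src -> $dst:$dport"

    # ingress_sbox: the packet is marked, IPVS selects a backend (the port
    # is left as is), and SNAT sets the source to this node's ingress IP.
    dst=$backend
    src=10.11.0.4
    echo "ingress_sbox: $src -> $dst:$dport"

    # Container namespace: REDIRECT rewrites the published port to 80.
    if [ "$dport" = 8080 ]; then
        dport=80
    fi
    echo "container:    $src -> $dst:$dport"
}

trace_request 192.0.2.10 198.51.100.5 8080 10.11.0.13
```

Each printed line corresponds to one of the namespaces examined above, so the output can be compared hop by hop with the iptables and `ipvsadm` rules.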